Jenkins projects at my current workplace are really heterogeneous: we’re using both TFS and Git for SCM, building on CentOS and Windows alike, with everything from Java to .NET projects. Lately, NodeJS was added to the mix. There are a variety of approaches for building NodeJS projects in Jenkins out there, but none of them really fit our case, for the following reasons:

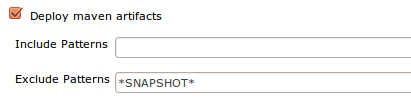

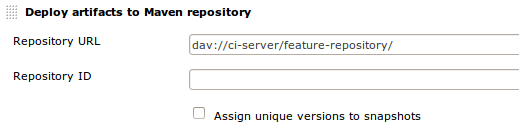

- Packaged application must be published to our local Artifactory
  - This is part of our regular CI flow
  - All artifacts must reside there
- No package managers allowed on production servers
  - When deploying, the entire application should be deployable as is, as a single package picked up from Artifactory
  - OS dependencies are already present when the application is deployed; all others must be contained in the application itself
- Managing private NodeJS dependencies
  - Java / Maven projects already support this natively, so the idea was to keep the flow as close to that one as possible
  - When Artifactory starts supporting NPM repositories, this might change

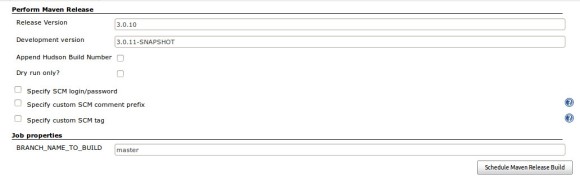

- Must use the Maven Release Plugin to, well, perform releases
  - On Jenkins, new versions should be released using this plugin

Some of these are imposed by our own workflow, which may not match yours, but I think the final solution is sound and applicable to other ecosystems as well.

Since Maven was needed in the flow, both for managing dependencies and for performing releases from Jenkins, we had to reconcile the Maven project definition in pom.xml with the NodeJS project structure. The pom had to support the following actions, within the Maven lifecycle and therefore on Jenkins too:

- Install test and run-time dependencies
  - They are needed on developer and Jenkins build machines
  - They are installed to the project’s node_modules folder
- Install private dependencies
  - These come from other in-house projects
- Create packages for deployment
  - To be published to Artifactory from Jenkins
- Run tests
  - With results in a format Jenkins can read
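Before going through the individual steps, here is a rough sketch of how they could map onto the `<build>` section of pom.xml. Only the dependency plugin version (2.6) comes from the configuration in this post; the AntRun version is an assumption, and the executions are left as placeholders for the ones described in the following sections.

```xml
<!-- Sketch of the <build> section; executions are placeholders for the ones shown in this post -->
<build>
  <plugins>
    <!-- Ant tasks: "npm install" (compile phase) and nodeunit (test phase) -->
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-antrun-plugin</artifactId>
      <!-- assumed version; later releases replace <tasks> with <target> -->
      <version>1.7</version>
      <executions>
        <!-- execution "compile": npm install -->
        <!-- execution "test": nodeunit with JUnit reports -->
      </executions>
    </plugin>
    <!-- private dependencies from Artifactory, unpacked into node_modules (compile phase) -->
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-dependency-plugin</artifactId>
      <version>2.6</version>
      <executions>
        <!-- execution "unpack" -->
      </executions>
    </plugin>
    <!-- zip package for deployment to Artifactory (package phase) -->
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-assembly-plugin</artifactId>
      <executions>
        <!-- execution "make-zip-assembly" -->
      </executions>
    </plugin>
  </plugins>
</build>
```

As far as I know, Maven 3 runs executions bound to the same phase in the order their plugins are declared, so with this layout `npm install` runs before the private dependencies are unpacked into node_modules.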

Installing test and run-time dependencies is done with an Ant task, run through the Maven AntRun Plugin in the compile phase:

```xml
<!-- maven-antrun-plugin execution: runs "npm install" in the compile phase -->
<execution>
  <id>compile</id>
  <phase>compile</phase>
  <configuration>
    <tasks>
      <echo message="========== installing public dependencies ===================" />
      <!-- stop the build if npm install fails -->
      <exec executable="npm" dir="${project.basedir}" failonerror="true">
        <arg value="install" />
      </exec>
    </tasks>
  </configuration>
  <goals>
    <goal>run</goal>
  </goals>
</execution>
```

So essentially, `npm install` is executed. Using npm at this stage is fine, since developer machines and CI build machines have internet access and can easily pull all those dependencies; it’s only the production servers that must do without a package manager.
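Since we build on Windows as well as CentOS, one caveat: on Windows, Ant’s `<exec>` can’t start `npm` directly, because there it is an `npm.cmd` batch file and Windows only looks for `.exe` files when no extension is given. A sketch of a cross-platform `<tasks>` body for the execution above, assuming the Ant version used by your AntRun plugin supports the `osfamily` attribute:

```xml
<!-- Sketch: replacement <tasks> body for the "compile" execution on mixed Linux/Windows agents -->
<tasks>
  <echo message="========== installing public dependencies ===================" />
  <!-- Linux/macOS: npm is an executable on the PATH -->
  <exec executable="npm" dir="${project.basedir}" failonerror="true" osfamily="unix">
    <arg value="install" />
  </exec>
  <!-- Windows: npm is npm.cmd, so run it through cmd /c -->
  <exec executable="cmd" dir="${project.basedir}" failonerror="true" osfamily="windows">
    <arg value="/c" />
    <arg value="npm" />
    <arg value="install" />
  </exec>
</tasks>
```

Only one of the two `<exec>` elements runs on any given agent, so the same pom works on both build platforms.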

Private dependencies are a bit different: they reside in Artifactory and can’t be installed with npm. Hence, we need to download them from Artifactory and unpack them into the same folder as the other dependencies. An Ant task could do that, but Maven already has the appropriate Maven Dependency Plugin, which we’ll use here:

```xml
<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-dependency-plugin</artifactId>
  <version>2.6</version>
  <executions>
    <execution>
      <id>unpack</id>
      <phase>compile</phase>
      <goals>
        <goal>unpack-dependencies</goal>
      </goals>
      <configuration>
        <!-- unpack next to the modules installed by npm -->
        <outputDirectory>${project.basedir}/node_modules</outputDirectory>
        <!-- keep already unpacked releases, always refresh snapshots -->
        <overWriteReleases>false</overWriteReleases>
        <overWriteSnapshots>true</overWriteSnapshots>
        <!-- one folder per artifact -->
        <useSubDirectoryPerArtifact>true</useSubDirectoryPerArtifact>
        <!-- only our in-house (private) dependencies -->
        <includeGroupIds>org.acme.test</includeGroupIds>
        <stripVersion>true</stripVersion>
      </configuration>
    </execution>
  </executions>
</plugin>
```
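The plugin only unpacks what the pom declares as dependencies, so each private module still has to be listed in the `<dependencies>` section. A sketch with made-up coordinates (`acme-common`, `1.0.0`), assuming the library project publishes its zip without a classifier, as the assembly configuration in the next section does for the main project with `appendAssemblyId` set to false:

```xml
<!-- Hypothetical private dependency: the artifactId and version are made up.
     The groupId must match <includeGroupIds> so unpack-dependencies picks it up. -->
<dependencies>
  <dependency>
    <groupId>org.acme.test</groupId>
    <artifactId>acme-common</artifactId>
    <version>1.0.0</version>
    <!-- the zip produced by the library's assembly, not a jar -->
    <type>zip</type>
  </dependency>
</dependencies>
```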

Packaging a NodeJS project for publishing to Artifactory is actually very easy: it consists of archiving all the code, and that’s it 🙂 At this point, node_modules is already filled with the run-time and private dependencies (from the previous steps), which the application code references, so we could just pack the entire workspace and be done with it. Still, shipping all those files seemed a bit odd, so in the end I filtered out the folders that aren’t needed. The Maven Assembly Plugin is used for that, and the configuration looks like this:

```xml
<!-- assembly execution in pom.xml (inside maven-assembly-plugin) -->
<execution>
  <id>make-zip-assembly</id>
  <phase>package</phase>
  <goals>
    <goal>single</goal>
  </goals>
  <configuration>
    <finalName>${project.name}-${project.version}</finalName>
    <!-- no "-zip" assembly id suffix in the file name -->
    <appendAssemblyId>false</appendAssemblyId>
    <descriptors>
      <descriptor>assembly-zip.xml</descriptor>
    </descriptors>
  </configuration>
</execution>
```

```xml
<!-- assembly definition in assembly-zip.xml -->
<assembly>
  <id>zip</id>
  <formats>
    <format>zip</format>
  </formats>
  <includeBaseDirectory>false</includeBaseDirectory>
  <fileSets>
    <fileSet>
      <!-- the whole project, including the populated node_modules... -->
      <directory>.</directory>
      <outputDirectory>/</outputDirectory>
      <!-- ...minus the files not needed at run time -->
      <excludes>
        <exclude>test/**</exclude>
        <exclude>target/**</exclude>
        <exclude>assembly*.xml</exclude>
        <exclude>pom.xml</exclude>
      </excludes>
    </fileSet>
  </fileSets>
</assembly>
```

The last step is running the tests. Test results must also be JUnit compatible, so that they can be published on Jenkins and Jenkins can flag the build when tests don’t pass. For this example I’ve been using nodeunit, but the approach applies just as easily to any other testing framework you might be using; the only requirement is that the framework can export JUnit-compatible results. Nodeunit supports this through its `--reporter junit` parameter. Ant is used again:

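```xml
<!-- maven-antrun-plugin execution: runs nodeunit in the test phase -->
<execution>
  <id>test</id>
  <phase>test</phase>
  <configuration>
    <tasks>
      <echo message="========== running tests with JUnit compatible results ===================" />
      <!-- failonerror="false": failing tests don't abort the Maven build,
           so Jenkins can still collect the reports and judge the build from them -->
      <exec executable="node_modules/nodeunit/bin/nodeunit" dir="${project.basedir}" failonerror="false">
        <arg value="--reporter" />
        <arg value="junit" />
        <arg value="test/" />
        <arg value="--output" />
        <arg value="target/failsafe-reports" />
      </exec>
    </tasks>
  </configuration>
  <goals>
    <goal>run</goal>
  </goals>
</execution>
```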

One more trick was needed in this Ant task to make the tests visible to Jenkins: the results are written to `target/failsafe-reports`. If you don’t put them there, Jenkins will not pick up the test results.
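For reference, a JUnit-compatible report is an XML file along these lines. This is a hand-written illustration of the general format (suite, test names, and message are made up), not verbatim nodeunit output. Jenkins reads the `<testcase>` elements, and those containing a `<failure>` or `<error>` element are reported as failed:

```xml
<!-- Illustrative only: general shape of a JUnit XML report -->
<testsuite name="example" tests="2" failures="1" errors="0">
  <testcase classname="example" name="adds numbers" />
  <testcase classname="example" name="handles empty input">
    <failure message="expected 0 but got undefined" />
  </testcase>
</testsuite>
```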

This completes the pom.xml configuration, and the project is ready to be built on Jenkins. There, you just need to create a Maven build project and set it up as usual: publishing the created artifacts to Artifactory, a Maven goal such as `clean install` (or anything else that includes the tests), and of course the usual SCM details (Git or whatever you are using). With this in place, tests are executed and recognized by Jenkins, and releasing from Jenkins works as well.
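Releasing with the Maven Release Plugin also requires the pom to say where the sources live, because `release:prepare` commits the release and next development versions and creates a tag. That information comes from the `<scm>` section; a minimal sketch for Git, with placeholder URLs:

```xml
<!-- Sketch: SCM details used by the Maven Release Plugin; the URLs are placeholders -->
<scm>
  <!-- read-only access -->
  <connection>scm:git:https://git.example.com/acme/node-app.git</connection>
  <!-- read/write access, used when committing and tagging the release -->
  <developerConnection>scm:git:https://git.example.com/acme/node-app.git</developerConnection>
</scm>
```

Note that by default `release:perform` runs the `deploy` goal, so if you release this way you will likely also need a `<distributionManagement>` section pointing at your Artifactory repositories.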

I’ve described only the main (deployable) project configuration, but common dependency projects can be configured in the same manner. The only thing to take care of is artifact versioning, since NodeJS’s standard versioning (the `version` field in package.json) is completely independent of the way Maven handles versions. I guess one could also tweak the NodeJS project version to be read from pom.xml, so everything stays DRY. But that is a tale for another time.
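For the curious, one possible approach (not part of the setup described here) is to keep a package.json template, hypothetically at `src/main/npm/package.json`, whose version field reads `"version": "${project.version}"`, and let the Maven Resources Plugin copy it to the project root with filtering enabled before `npm install` runs:

```xml
<!-- Hypothetical sketch: regenerate package.json from a template before npm install runs -->
<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-resources-plugin</artifactId>
  <executions>
    <execution>
      <id>generate-package-json</id>
      <!-- generate-resources comes before compile, where npm install is bound -->
      <phase>generate-resources</phase>
      <goals>
        <goal>copy-resources</goal>
      </goals>
      <configuration>
        <outputDirectory>${project.basedir}</outputDirectory>
        <resources>
          <resource>
            <directory>src/main/npm</directory>
            <includes>
              <include>package.json</include>
            </includes>
            <!-- replaces ${project.version} with the pom version -->
            <filtering>true</filtering>
          </resource>
        </resources>
      </configuration>
    </execution>
  </executions>
</plugin>
```

This only works if the Maven versions are valid semver: a three-part version such as `1.0.0-SNAPSHOT` is accepted by npm as a pre-release, whereas `1.0-SNAPSHOT` is not.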

Complete pom.xml configurations for deployable and dependency projects can be found here and here, so feel free to use them in your projects. That’s it, folks 🙂